Project Group

Goals:

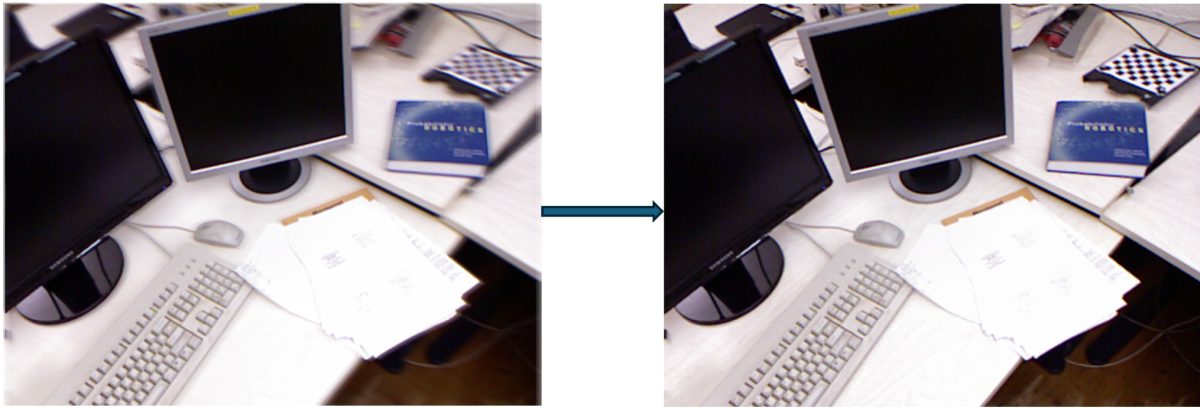

In real-world captures, parts of the input sequence are blurred (hand shake, rolling-shutter sweep, defocus, long exposure, fast motion). Vanilla 3D Gaussian Splatting (3DGS) minimizes photometric error directly against these frames, so the blur gets baked into the radiance/appearance of the Gaussians. PSNR/SSIM measured against the blurry reference frames can look fine, but novel views are soft. We want to separate scene sharpness from capture blur by learning a differentiable image-space blur that explains the observations, while keeping the underlying 3D model crisp.

Idea:

Render a sharp image from the current 3DGS state, pass it through a learned blur module that models the camera's point-spread function (PSF) or motion-blur trajectory, and compute the photometric loss between this blurred rendering and the observed frame. We fit the blur module per camera/viewpoint, so supervision from blurry frames flows primarily into the blur parameters, not into the 3D Gaussians. A regularization term may be needed to enforce this separation or to ensure that the 3DGS output stays sharp.
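A minimal sketch of this forward model for a single view. It assumes one spatially uniform kernel per view and a grayscale image, and it is written in plain NumPy. The names `blur_image`, `kernel_spread`, `view_loss` and the weight `lambda_reg` are hypothetical. The spread penalty is only one possible choice for the regularization term mentioned above. In an actual 3DGS pipeline the sharp image would come from the rasterizer and gradients from an autodiff framework, both of which this sketch leaves out.

```python
import numpy as np


def blur_image(sharp: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """Convolve a (H, W) image with an odd-sized (k, k) kernel, replicating edge pixels."""
    k = kernel.shape[0]
    r = k // 2
    h, w = sharp.shape
    padded = np.pad(sharp.astype(np.float64), r, mode="edge")
    flipped = kernel[::-1, ::-1]  # flip so this is a true convolution, not a correlation
    out = np.zeros((h, w), dtype=np.float64)
    for dy in range(k):
        for dx in range(k):
            out += flipped[dy, dx] * padded[dy:dy + h, dx:dx + w]
    return out


def kernel_spread(kernel: np.ndarray) -> float:
    """Second moment of the kernel about its centre: 0 for a delta (no blur), larger for wider blur."""
    r = kernel.shape[0] // 2
    ys, xs = np.mgrid[-r:r + 1, -r:r + 1]
    return float(np.sum(kernel * (xs**2 + ys**2)))


def view_loss(sharp_render: np.ndarray, observed: np.ndarray,
              kernel: np.ndarray, lambda_reg: float = 1e-3) -> float:
    """Loss for one view: MSE between the *blurred* render and the observed frame,
    plus a hypothetical spread penalty favouring the sharpest kernel that fits the data."""
    blurred = blur_image(sharp_render, kernel)
    photometric = float(np.mean((blurred - observed) ** 2))
    return photometric + lambda_reg * kernel_spread(kernel)


# Usage: a synthetic "blurry capture" explained exactly by a 3x3 box kernel.
rng = np.random.default_rng(0)
sharp = rng.random((8, 8))
box = np.full((3, 3), 1.0 / 9.0)
observed = blur_image(sharp, box)
loss = view_loss(sharp, observed, box)  # photometric term is zero; only the spread penalty remains
```

Because the loss is taken after the blur, a per-view kernel that matches the capture blur drives the photometric term to zero without the sharp render having to become blurry. The spread weight then controls how readily the kernel, rather than the scene, absorbs blur.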

Possible Approaches:

- Parametric PSF (e.g., Gaussian, defocus, or motion-blur kernel) whose parameters are tied to the camera position/viewpoint.

- A tiny MLP that predicts an N×N convolution kernel, effectively modelling a motion-blur field.
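Both approaches can be sketched as functions that return a normalized N×N kernel, which would then serve as the blur kernel applied to a rendered view. The sketch assumes NumPy. The concrete kernel shapes (isotropic Gaussian, uniform disk for defocus, straight-line segment for motion) and the MLP inputs (normalized pixel coordinates plus a per-view embedding) are illustrative assumptions. `TinyKernelMLP` is a hypothetical, untrained forward pass only.

```python
import numpy as np


def gaussian_kernel(size: int, sigma: float) -> np.ndarray:
    """Isotropic Gaussian PSF, normalized to sum to 1."""
    r = size // 2
    ys, xs = np.mgrid[-r:r + 1, -r:r + 1]
    k = np.exp(-(xs**2 + ys**2) / (2.0 * sigma**2))
    return k / k.sum()


def disk_kernel(size: int, radius: float) -> np.ndarray:
    """Defocus PSF approximated by a uniform disk (circle of confusion)."""
    r = size // 2
    ys, xs = np.mgrid[-r:r + 1, -r:r + 1]
    k = (xs**2 + ys**2 <= radius**2).astype(np.float64)
    return k / k.sum()


def motion_kernel(size: int, length: float, angle_rad: float, samples: int = 64) -> np.ndarray:
    """Linear motion-blur PSF: accumulate sample points along a centred line segment."""
    r = size // 2
    k = np.zeros((size, size))
    for t in np.linspace(-0.5, 0.5, samples):
        x = int(round(r + t * length * np.cos(angle_rad)))
        y = int(round(r + t * length * np.sin(angle_rad)))
        if 0 <= x < size and 0 <= y < size:
            k[y, x] += 1.0
    return k / k.sum()


class TinyKernelMLP:
    """Hypothetical tiny MLP: (u, v, view embedding) -> N x N kernel via softmax."""

    def __init__(self, n: int, embed_dim: int, hidden: int = 32, seed: int = 0):
        rng = np.random.default_rng(seed)
        self.n = n
        self.w1 = rng.normal(0.0, 0.1, (2 + embed_dim, hidden))
        self.b1 = np.zeros(hidden)
        self.w2 = rng.normal(0.0, 0.1, (hidden, n * n))
        self.b2 = np.zeros(n * n)

    def __call__(self, uv: np.ndarray, view_embed: np.ndarray) -> np.ndarray:
        """uv: (P, 2) normalized pixel coords; view_embed: (embed_dim,). Returns (P, n, n)."""
        embed = np.broadcast_to(view_embed, (uv.shape[0], view_embed.shape[0]))
        x = np.concatenate([uv, embed], axis=1)
        h = np.maximum(x @ self.w1 + self.b1, 0.0)  # ReLU hidden layer
        logits = h @ self.w2 + self.b2
        logits = logits - logits.max(axis=1, keepdims=True)  # numerical stability
        w = np.exp(logits)
        w = w / w.sum(axis=1, keepdims=True)  # softmax: non-negative, sums to 1
        return w.reshape(-1, self.n, self.n)
```

A softmax output keeps every predicted kernel non-negative and summing to one, so the blur redistributes energy without changing overall brightness. Evaluating the MLP at different pixel coordinates yields a spatially varying kernel, i.e. a blur field, whereas the parametric kernels above use a handful of parameters per view.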